Tesla has been promising autonomous driving for over a decade, although this dream might be as distant as ever. The most advanced version of Tesla Full Self-Driving is still in beta, and the progress has been minimal. Here's how everything started and where it's heading.

Tesla is credited with jumpstarting the EV revolution with the launch of the Roadster in 2008. It was an impressive EV at launch and is a sought-after collectible today, but it was nothing more than a testbed for Tesla's electric powertrain. The Roadster was based on a Lotus Elise chassis and sold in limited numbers between 2008 and 2012.

Although the Roadster was the first series production EV fitted with a Li-ion battery, the Model S was Tesla's first model built from the ground up to demonstrate to the world that electric vehicles can be desirable. As Elon Musk let us all know in his 2006 Master Plan, launching the Model S was step two, with the next step being launching an even more affordable electric car. That arrived in 2017 as the Model 3, a true mass-market EV that established Tesla as a carmaker.

Even before the Model 3 became a success story (it also nearly bankrupted Tesla in the process), Elon Musk published Master Plan Part Deux in 2016. This was a very ambitious manifesto that is still not complete today. Besides expanding the Tesla EV lineup to cover all forms of terrestrial transport and integrating energy generation with battery storage, the Master Plan Part Deux introduced a new concept: Autonomy.

Elon Musk started talking about Autopilot three years before Master Plan Part Deux was published. He made no secret that he was inspired by aviation when designing and naming the system. This also led to confusion among Tesla customers, who thought that, like planes, their cars could drive themselves while Autopilot was engaged. Some paid with their lives for this confusion, with the small consolation that their sacrifice contributed to improving the system and regulations to this day.

The first Tesla customers could pre-purchase Autopilot in October 2014, when Tesla cars were already manufactured with the necessary hardware to support it. The first Autopilot software release arrived in October 2015 with Tesla software version 7.0. Within one year, when Master Plan Part Deux was published, Autopilot had already accumulated 300 million miles (500 million km), with the Tesla fleet adding 3 million miles per day. Tesla considered that worldwide regulatory approval would require something on the order of 6 billion miles (10 billion km).
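As a rough back-of-the-envelope check on those figures, the sketch below estimates how long the fleet would have needed to reach the 6-billion-mile mark at the 2016 pace. The constant daily rate is an assumption made purely for illustration (the fleet was growing, so the real pace would have been faster), and the function name is hypothetical.

```python
def days_to_target(current_miles: float, target_miles: float, miles_per_day: float) -> float:
    """Days needed to reach target_miles at a constant daily rate (an assumption)."""
    if miles_per_day <= 0:
        raise ValueError("miles_per_day must be positive")
    # Never return a negative duration if the target is already met.
    return max(target_miles - current_miles, 0.0) / miles_per_day


# Figures quoted in the article for mid-2016.
ACCUMULATED_MILES = 300e6    # miles driven with Autopilot so far
TARGET_MILES = 6e9           # Tesla's estimate for worldwide regulatory approval
FLEET_MILES_PER_DAY = 3e6    # miles the fleet was adding per day

days = days_to_target(ACCUMULATED_MILES, TARGET_MILES, FLEET_MILES_PER_DAY)
years = days / 365.25
print(f"{days:.0f} days, or about {years:.1f} years")
```

At a flat 3 million miles per day, the remaining 5.7 billion miles work out to 1,900 days, or a little over five years.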

Hardware 1 was based on MobilEye's EyeQ3 platform and relied on a single monochrome camera, radar, and ultrasonic sensors to provide advanced driving assistance (ADAS) features. These included automatic emergency braking (AEB), traffic-aware cruise control (TACC), lane-keeping, and lane-centering assist (LKA, LDA, LDW). HW1 vehicles also provided Autopark and Summon.

Tesla's partnership with MobilEye ended abruptly in 2016 after the first deadly crash of a Model S with active Autopilot. MobilEye's CEO Amnon Shashua accused Tesla of "pushing the envelope in terms of safety" and stressed that Autopilot was a "driver assistance system" and not a "driverless system." After this episode, Tesla switched to Nvidia for its Autopilot hardware.

Hardware 2, or Autopilot 2, arrived in October 2016 and featured the Nvidia Drive PX2 AI computing system and a comprehensive sensor set. This included three forward cameras (narrow, main, and wide), two forward-looking and two rearward-looking side cameras, one rear camera, a radar sensor, and 12 ultrasonic sensors. This set of sensors was used until Hardware 4 launched in February 2023, although Tesla removed the radar in May 2021 and the ultrasonic sensors in October 2022.

Hardware 2 Teslas were initially crippled compared to HW1-equipped vehicles, missing some notable features like Summon until March 2017. This happened again with HW4, which only recently gained all the capabilities of HW3 vehicles. Tesla introduced Enhanced Autopilot on cars equipped with Hardware 2, with Navigate on Autopilot as its signature feature. This allowed changing lanes without driver input and navigating on-ramps and off-ramps.

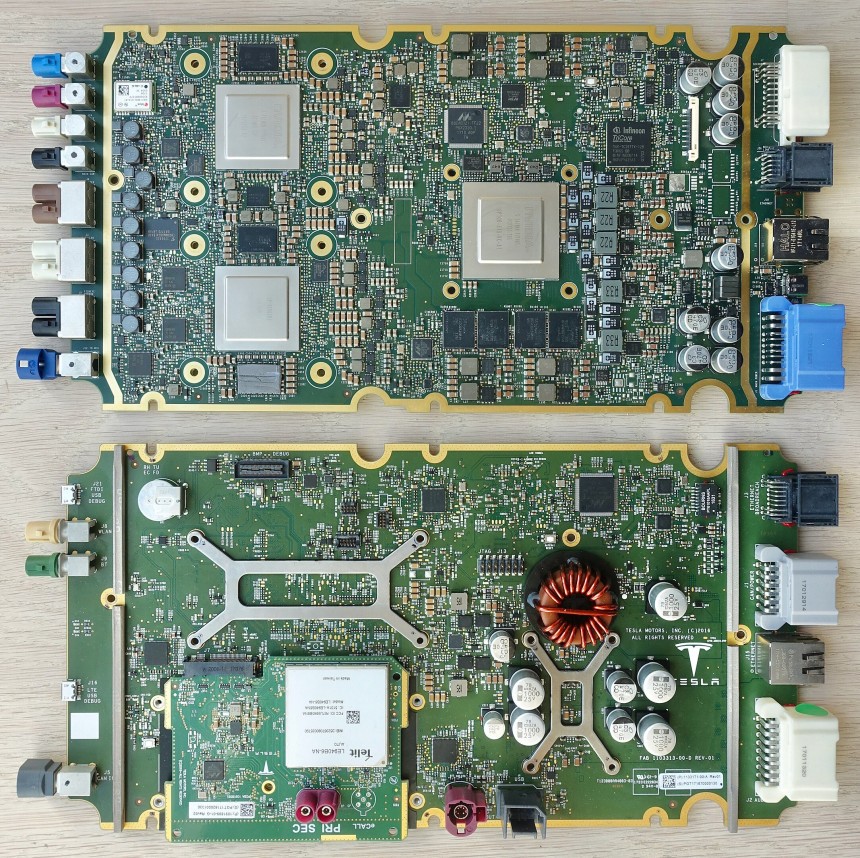

Tesla ran into a computational bottleneck with existing hardware by 2018, significantly hindering the company's self-driving efforts. Hardware 3 launched in 2019 to allow running neural networks on the vehicle and processing vast amounts of data. With Hardware 3, Tesla introduced its custom-designed chips, aptly called "FSD Chips." Tesla said the proprietary chips could process images at 2,300 frames per second while each of the two neural network arrays on a single FSD Chip could perform 36 trillion operations per second. Hardware 3 featured two FSD chips for redundancy.
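To put Tesla's quoted figures in perspective, the short sketch below does the arithmetic. It assumes the eight-camera suite described earlier, treats the 2,300 frames per second as a total budget split evenly across cameras, and treats the second chip purely as a redundant backup. These are illustrative assumptions, not Tesla specifications, and the constant names are made up for this example.

```python
# Figures quoted by Tesla for Hardware 3, as reported in the article.
TOPS_PER_ARRAY = 36        # trillion operations per second per neural network array
ARRAYS_PER_CHIP = 2        # neural network arrays on one FSD Chip
CHIPS_PER_COMPUTER = 2     # two FSD Chips per HW3 computer, for redundancy
FRAMES_PER_SECOND = 2300   # quoted image-processing rate

# Assumption: the eight cameras of the HW2/HW3 sensor suite share the frame budget evenly.
CAMERAS = 8

tops_per_chip = TOPS_PER_ARRAY * ARRAYS_PER_CHIP
tops_per_computer = tops_per_chip * CHIPS_PER_COMPUTER
frames_per_camera = FRAMES_PER_SECOND / CAMERAS

print(f"Per chip: {tops_per_chip} TOPS")
print(f"Both chips combined: {tops_per_computer} TOPS")
print(f"Frame budget per camera: {frames_per_camera} fps")
```

If the second chip only mirrors the first, the usable figure is the 72 TOPS of a single chip rather than the combined 144 TOPS.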

Tesla claimed that HW3 was necessary to run its Full Self-Driving software and later extended the claim to say that it was also enough to reach full autonomy. The HW3 computer was offered as a free retrofit to customers with HW2 and HW2.5 who purchased the FSD package. Four years later, Hardware 4 was introduced on the revamped Model S and Model X, changing the autonomy books. But until then, Elon Musk had a crazy idea.

On vehicles without radar sensors, some features (Smart Summon, Emergency Lane Departure Avoidance) were disabled, while others (traffic-aware cruise control) were speed-limited. Tesla promised to reinstate these features with an upcoming software update "in the weeks ahead." It was more like "the months ahead" because Tesla reintroduced the missing functions in July 2021. Later, it removed the radar functionality even on older vehicles that still had radar sensors.

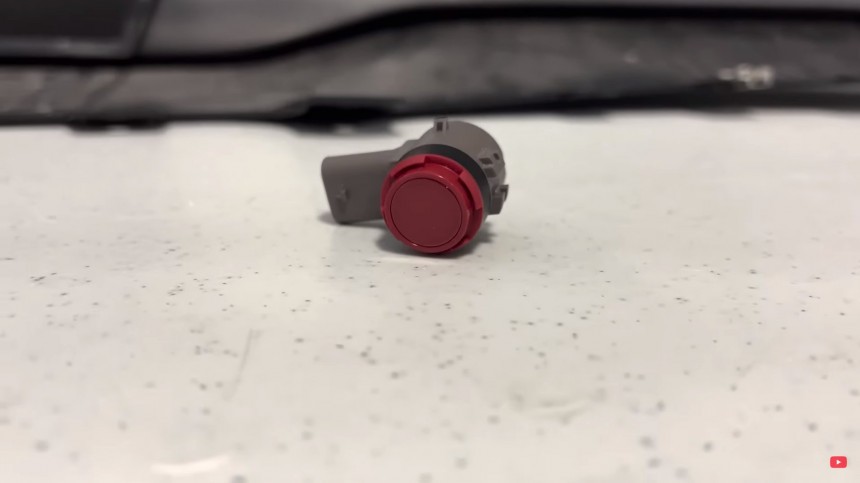

Removing the radar was only the first step. In October 2022, Tesla also scrapped the ultrasonic sensors. In the grand scheme of things, ultrasonic sensors were probably considered less important for achieving the goal of full self-driving. However, for Tesla owners, removing these tiny sensors had broader implications. Suddenly, Tesla EVs became less appealing than the cheapest vehicles on the market, many of which feature park assist. Teslas no longer did.

It wasn't only Park Assist features that disappeared, but also Smart Summon and Auto Park. For owners of the Tesla Model X, the auto-presenting doors feature was severely crippled. One year later, only park distance measurement has been reinstated, with mixed results. So far, despite Elon Musk's optimism, Tesla Vision has been nothing but a major disappointment for owners.

This means Tesla finally admitted that radar is essential, and the blind spot in front of the car cannot be avoided without ultrasonic sensors or a new camera. It also meant that not all Teslas were equipped for fully autonomous driving, as Musk promised. Otherwise, why would you need radar and additional cameras?

Hardware 4 also introduced a significant upgrade in processing power and better cameras. However, it took Tesla more than half a year to make it work, irking those who bought a Model S, Model X, and, later, Model Y with the HW4 computer.

Tesla Autopilot is today offered on every Tesla as part of the standard configuration. It provides basic features like Traffic-Aware Cruise Control (TACC) and Autosteer, which are common on many car models nowadays. Autopilot can match the speed of your car to that of the surrounding traffic and helps keep the car centered within a clearly marked lane by making steering adjustments.

With Hardware 2, Tesla introduced Enhanced Autopilot, a more capable version of the original software. It was (and still is) an optional upgrade that adds semi-autonomous driving features in certain conditions (highways). These include Navigate on Autopilot, which actively guides the vehicle from a highway's on-ramp to off-ramp, suggests lane changes, and automatically engages the turn signals. When Autosteer is engaged, it can perform automatic lane changes, making long journeys more bearable.

Enhanced Autopilot includes features like Autopark, Summon, and Smart Summon, although not all are available on all car models. Notably, Autopark and Smart Summon are not offered on non-USS vehicles for the time being. This makes Enhanced Autopilot less worth its price, which currently stands at $6,000. Despite the current limitations, Tesla still promises all of these features to prospective buyers, which is misleading.

Recently, Tesla expanded FSD Beta to all Tesla owners in North America, so the Full Self-Driving Capability now lists Autosteer on city streets as one of its features. This applies only to those who buy the package in the US and Canada. European customers (and those from other continents) still don't have access to this advanced feature, and there's no timeline. Tesla was expected to start tests in Europe this year, but there's no information on this.

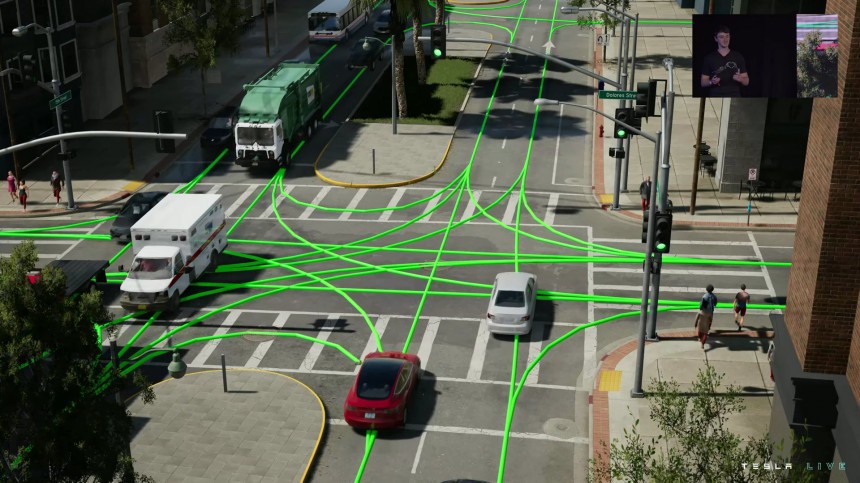

FSD Beta is an intermediary step toward the ultimate goal, which is full autonomy. Elon Musk is convinced that autonomous driving can make or break Tesla and is willing to bet anything on it. Still, recent controversies surrounding autonomous driving projects, including Cruise, indicate that AD companies might not be so far ahead in achieving full autonomy. Cruise admitted that human operators were required to intervene every 2-5 miles, which basically rules out Cruise robotaxis as autonomous vehicles.

An important event for Tesla FSD development happened when the NHTSA started investigating the system at the start of this year. The agency discovered that the software might "infringe upon local traffic laws or customs" while driving the car in specific conditions. These include traveling through an intersection during a stale yellow traffic light, failing to stop completely at a stop sign, or traveling straight through an intersection while in a turn-only lane.

In light of these findings, Tesla completely paused FSD development until it could offer a solution, which, like other safety fixes, was labeled a "recall." This irritated Tesla fans, but not as much as not having FSD updates for a while. The pause ended in March when Tesla offered a fix to the issues raised by the NHTSA with the 2022.45.10 OTA update. Tesla also edited its website to clarify that FSD Beta is an SAE Level 2 driver assist system, not an autonomous driving system.

Tesla is currently working on FSD V12, which, according to Elon Musk, will mark the completion of the beta program. Unfortunately, Musk has been captivated by Twitter/X lately and hasn't updated his followers and Tesla fans about the FSD V12 progress. The latest we've seen was in August when Musk demoed the FSD Beta V12 live with less-than-impressive results. At about the same time, Tesla cut the price of the FSD package by $3,000 to $12,000. This doesn't sound very confident, in my opinion.

Still, Musk remains committed to bringing a robotaxi onto the streets as soon as possible. This was the only thing that convinced him to reconsider the idea of an affordable EV. According to Walter Isaacson's book on Elon Musk, the Tesla CEO was finally convinced that building the compact EV and the robotaxi using the same EV platform would bring down the costs through economies of scale. Only a high-volume model would offset the high development costs of the Gen-3 platform.

Rough estimates indicate that Tesla will probably launch the Gen-3 vehicles in 2025, so it will need to speed up FSD development. Many are skeptical that Tesla could solve autonomy by then, but I am rather optimistic. Autopilot launched less than a decade ago, while FSD is only four years old, but it's easy to see the progress. Even though Musk is absent from the agora with updates about FSD, Tesla engineers certainly keep working on it.

Tesla Autopilot: How everything started

This concept started a major push to replace the human driver behind the steering wheel with a fully automated driving system. Based on the second master plan, this system could be ten times safer than humans, saving countless lives. But before reaching that point, Tesla's autonomous driving features had already made waves, started controversies, and were harshly criticized. And everything began with naming the first iteration "Autopilot."

Hardware: what are Hardware 1 and HW2-HW4

Autopilot software requires specialized hardware to gather and process data. Naturally, the first iteration of Tesla Autopilot hardware was named "Hardware 1," and the subsequent iterations were incremented accordingly. Regardless of the iteration, the Autopilot hardware comprises the Autopilot Sensor Suite to "see" and "sense" the surroundings and the Autopilot Computer to process the information and assist the driver. The latest Tesla Autopilot hardware suite is Hardware 4, or HW4, capable of supervised self-driving features, which technically still represents Level-2 autonomy.

Redundancy finally arrived

In August 2017, Tesla upgraded the onboard processor and added redundancy with Hardware 2.5. It also upgraded the radar sensor to extend coverage to 558 feet/170 meters, a slight bump from 525 ft/160 m. Hardware 2.5 offered new features like Dashcam and Sentry Mode and, starting with the Model 3, a new cabin camera. This was intended for robotaxi operations, but Tesla activated it in 2021 to monitor driver attentiveness while using autonomous driving features.

Tesla Vision: Eyes are all we need

Elon Musk takes human beings as an example and considers that autonomous driving doesn't need more than cameras. With eight eyes on all four sides, Tesla vehicles could be more aware than humans, even without radar and ultrasonic sensors. Autonomous driving experts harshly criticized the move, although that didn't make Musk change his decision. Tesla eliminated the radar sensors from the Model 3 and Model Y in May 2021 and introduced limitations to how various assistance systems performed. Soon, the Model S and Model X followed.

Hardware 4: big expectations, mixed results

Nevertheless, the latest stab for owners of Tesla EVs without radar and ultrasonics came when Tesla released Hardware 4. Progress is essential, but this time, it came with the middle finger raised. Hardware 4 includes a radar sensor, at least for Model S and Model X, and is also designed to support an additional camera in the front bumper.

Tesla Autopilot and Enhanced Autopilot

If the hardware supporting Tesla autonomous driving features seems complicated, the software is even more so. Even though Elon Musk thought Autopilot should drive the car itself at some point, the setbacks with Hardware 1 and MobilEye made him reconsider. Autopilot provided basic driver assist features but was incapable of driving the vehicle itself, as some tragedies revealed.

Full Self-Driving is the ultimate goal

The Full Self-Driving package is the most complex (and expensive) tier of Tesla's software. Until recently, it only offered Traffic Light and Stop Sign Control in addition to the Enhanced Autopilot features to most people in North America. Select customers who were allowed to test more advanced features were given access to FSD Beta, which is capable of driving the car in most situations with minimal intervention.

Is it FSD autonomous driving software or just an advanced driver assistance system?

Like Autopilot, Full Self-Driving has known its fair share of controversies, starting with its name. People tend to take this too literally and are sometimes overconfident in technology. Inevitably, Teslas have been involved in crashes, and Autopilot and FSD have been suspected of causing them. Tesla, on its part, makes it very clear that both systems require active driver supervision and do not make the vehicle autonomous. But that didn't prevent people from suing and regulators from investigating.

What's next with Tesla Full Self-Driving?

For now, Full Self-Driving software is in beta, and it still needs polishing. Version 11 is pretty good at navigating traffic with little to no human intervention. Your mileage may vary depending on the area and the amount of data Tesla gathered about local streets. Some users reported outstanding results, while others were utterly disappointed using the same FSD Beta software version.
