Reuters reminded us that Tesla will face two crucial lawsuits in September and October. Both arise from deaths that allegedly occurred while Autopilot was active. The legal debate they will spark is fundamental not only to the future of Autopilot and Full Self-Driving but also to Tesla's standing as the world's most valuable carmaker by market cap (when VinFast can grab third place in such a ranking, questioning it is a given). The problem is that these lawsuits may lead several other carmakers to follow the same path, which could be quite risky.

The September lawsuit concerns Micah Lee's death. In 2019, his Model 3, allegedly on Autopilot, veered off the highway, struck a palm tree, and caught fire. Lee died, and two passengers were seriously injured. His estate and the two passengers accuse Tesla of knowing that Autopilot and other safety systems in the vehicle were defective. The case will be tried in California.

The second lawsuit will be heard in a Florida court. In October, a civil trial will decide whether the battery electric vehicle (BEV) maker is responsible for the death of Stephen Banner. As in the crash that killed Joshua Brown, the first person to die while using Autopilot, Banner's Model 3 drove under the trailer of a truck, killing him.

In both cases, Tesla denies that the beta software had any defects; in other words, it claims the system worked as intended. The BEV maker also accused Lee of driving under the influence of alcohol and denied that Autopilot was engaged when his car crashed. Jonathan Michaels, who represents the plaintiffs, said Tesla is making false claims and called its "attempts to blame the victims for their known defective Autopilot system" "shameful." This is just the beginning of legal battles that may shape all future lawsuits involving advanced driver assistance systems (ADAS).

The winning arguments will define how automakers sell these features: either more conservatively or with the same big promises Tesla makes, or even bigger ones. The BEV maker insists that Autopilot makes cars safer, a claim that researcher Noah Goodall has already debunked. He said that "much of the crash reduction seen by vehicles using Autopilot appears to be explained by lower crash rates experienced on freeways." When he also adjusted the numbers for age demographics, the estimated crash rate actually increased by 10% when Autopilot was used. In other words, by that estimate, Tesla's ADAS made cars less safe, yet the company keeps pushing its narrative as if it were the correct one.
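
To see how road mix alone can create an apparent safety benefit, here is a minimal Python sketch of direct standardization. All crash rates and mileage shares below are invented for illustration; they are not Goodall's or Tesla's figures. The only idea carried over from the text is that Autopilot miles skew heavily toward freeways, where crashes are rarer.

```python
# Hypothetical crash rates per million miles, by road type (invented numbers).
# In this made-up scenario, Autopilot is 20% worse than manual driving on
# BOTH road types.
RATES = {
    "autopilot": {"freeway": 1.2, "other": 3.6},
    "manual": {"freeway": 1.0, "other": 3.0},
}

# Hypothetical share of miles driven on each road type.
MILEAGE_MIX = {
    "autopilot": {"freeway": 0.9, "other": 0.1},  # mostly freeway miles
    "manual": {"freeway": 0.3, "other": 0.7},
}


def crude_rate(mode: str) -> float:
    """Overall crash rate weighted by the mode's own road mix (no adjustment)."""
    return sum(RATES[mode][road] * share for road, share in MILEAGE_MIX[mode].items())


def standardized_rate(mode: str, reference: str = "manual") -> float:
    """Crash rate re-weighted to the reference mode's road mix."""
    return sum(RATES[mode][road] * share for road, share in MILEAGE_MIX[reference].items())


if __name__ == "__main__":
    crude_ratio = crude_rate("autopilot") / crude_rate("manual")
    adjusted_ratio = standardized_rate("autopilot") / standardized_rate("manual")
    print(f"crude ratio: {crude_ratio:.2f}")
    print(f"adjusted ratio: {adjusted_ratio:.2f}")
```

With these made-up numbers, the crude comparison prints 0.60, so Autopilot looks 40% safer; weighted by the same road mix, it prints 1.20, i.e. 20% worse, because it is worse on every road type. An adjustment for driver age would apply the same re-weighting idea to age groups instead of road types.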

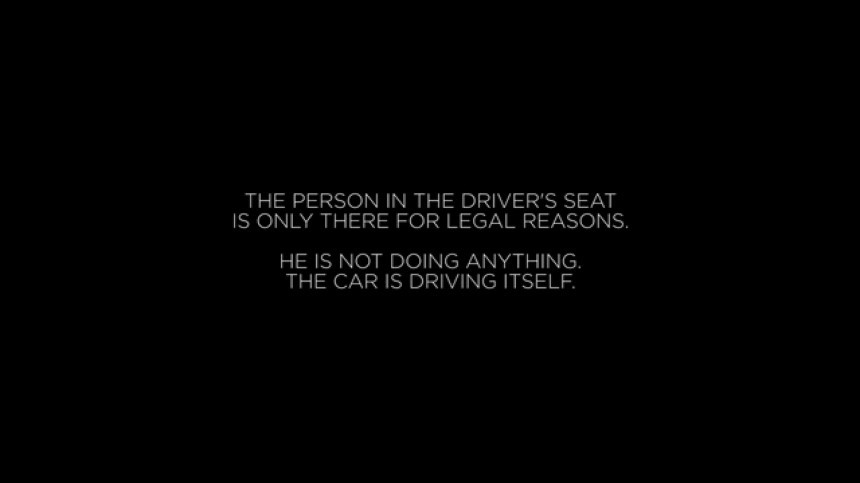

Everything changes when a crash happens: Tesla quickly invokes its legal disclaimer, which makes clear that the driver is in charge at all times. In short, when nothing goes wrong, the credit goes to Autopilot; when the car crashes, the blame goes to the driver. Madeleine Clare Elish called this a "moral crumple zone," in which users of new technologies take "the brunt of the moral and legal responsibilities when the overall system malfunctions."

As Elish put it, "while the crumple zone in a car is meant to protect the human driver, the moral crumple zone protects the integrity of the technological system, at the expense of the nearest human operator."

The question is whether the system malfunctions or simply works that way, which will be tricky to answer and carries profound legal consequences. It is among the doubts Philip Koopman raised about what these lawsuits may settle legally. The most important one is whether these cars crashed on Autopilot because the ADAS was broken or because of its limitations. Let's suppose it was a defect. As the autonomous vehicle (AV) safety expert asked on LinkedIn, is labeling Autopilot a beta enough to avoid any liability arising from that flaw? The courts will decide, but the fact that Tesla believes this works as a legal shield already speaks volumes.

Now imagine that the BEV maker manages to prove Autopilot had no defects and that the crashes resulted from its operational limitations. In theory, the plaintiffs would be left without an argument, especially if their case rests on blaming alleged Autopilot flaws for the collisions. For the lawsuits to succeed, they should focus on the consequences instead: whether through a flaw or through its regular operation, the ADAS either caused the crashes or failed to prevent them, even though Tesla tirelessly insists that it prevents crashes.

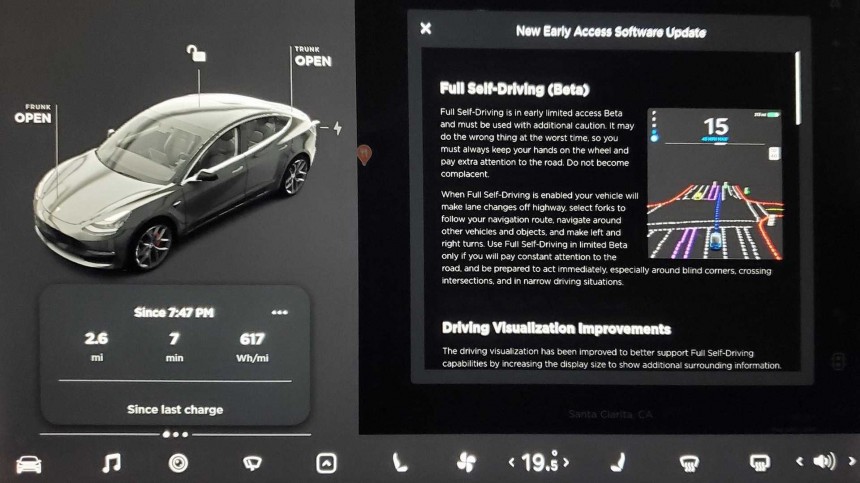

This is part of another controversy involving the BEV maker. Although it swears that Autopilot and Full Self-Driving (FSD) will eventually enable robotaxis, it classifies this software as Level 2 technology. As Koopman, an associate professor at Carnegie Mellon University, stressed, "the current version of SAE J3016 does not require enforcing the operational design domain (ODD) for Level 2." He has already proposed revising SAE J3016. Because its software is Level 2, Tesla is not subject to several restrictions that autonomous vehicle companies must obey. Take Autopilot and FSD, which are tested by regular customers: AV companies need trained engineers to perform these tests.

Curiously, Tesla won a lawsuit brought by Justine Hsu by arguing that she did not respect Autopilot's ODD. According to the company, she used the beta software on city streets when it should only be used on highways. The BEV maker also argued that Hsu was distracted while driving. The airbag in her Model S fractured her jaw, but the jury decided it worked as intended. How can Tesla argue about the ODD when its software engages in other environments? How can it be the user's fault when nothing prevents Autopilot from working where it shouldn't? If Tesla refuses to use geofencing to enforce Autopilot's and FSD's ODDs, how can its vehicles identify where these ADAS are allowed to work?
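
To make "enforcing the ODD" concrete, here is a minimal Python sketch of an engagement gate. Everything in it is hypothetical: the `RoadType` categories, the `inside_geofence` flag (standing in for a map lookup), and the rule that only divided highways are allowed. It does not describe how Tesla's or any other manufacturer's system works; it only shows that an ODD can be checked before a feature turns on, instead of being left to the driver.

```python
from dataclasses import dataclass
from enum import Enum


class RoadType(Enum):
    DIVIDED_HIGHWAY = "divided_highway"
    CITY_STREET = "city_street"


@dataclass(frozen=True)
class DrivingContext:
    road_type: RoadType    # hypothetical: classified from map data
    inside_geofence: bool  # hypothetical: result of a geofence lookup


# Hypothetical ODD: the feature is only designed for divided highways.
ALLOWED_ROAD_TYPES = frozenset({RoadType.DIVIDED_HIGHWAY})


def may_engage(ctx: DrivingContext) -> bool:
    """Refuse engagement unless the car is inside the mapped ODD."""
    return ctx.inside_geofence and ctx.road_type in ALLOWED_ROAD_TYPES


if __name__ == "__main__":
    print(may_engage(DrivingContext(RoadType.DIVIDED_HIGHWAY, True)))  # True
    print(may_engage(DrivingContext(RoadType.CITY_STREET, True)))      # False
```

Under a gate like this, engaging the feature on city streets would be blocked at engagement time (assuming the road classification is correct), so whether the driver respected the ODD would no longer be the deciding question.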

Whether by defect or by design, this software has been involved in several crashes and deaths. Ashok Elluswamy, director of the Autopilot program since May 2019, declared under oath that he did not know what ODD and perception-reaction time were. The latter is the time a driver needs to perceive a hazard and begin responding, which determines whether they can take back control from an ADAS before a crash happens. Elluswamy made those statements in the lawsuit Wei "Walter" Huang's wife filed against Tesla. That raises another crucial question: if a driver does not have enough time to react, does it even make a difference whether they are monitoring Autopilot or FSD?
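
A quick back-of-the-envelope calculation shows why perception-reaction time matters. The Python sketch below assumes constant speed and ignores braking distance entirely; the 65 mph speed, the 1.5 s and 2.5 s reaction times, and the 40 m hazard distance are assumed illustrative values, not figures from the Huang case.

```python
# Exact conversion: 1 mile = 1609.344 m, 1 hour = 3600 s.
MPH_TO_MPS = 1609.344 / 3600


def reaction_distance_m(speed_mph: float, reaction_time_s: float) -> float:
    """Distance travelled at constant speed before the driver starts to respond."""
    return speed_mph * MPH_TO_MPS * reaction_time_s


def driver_can_respond(hazard_distance_m: float, speed_mph: float,
                       reaction_time_s: float) -> bool:
    """True only if the hazard lies beyond the reaction distance.

    Braking distance is ignored, so this is an optimistic bound: False means
    the driver cannot even start braking before reaching the hazard.
    """
    return hazard_distance_m > reaction_distance_m(speed_mph, reaction_time_s)


if __name__ == "__main__":
    for t in (1.5, 2.5):  # assumed reaction times in seconds
        print(f"{t} s at 65 mph -> {reaction_distance_m(65, t):.1f} m")
    # Hypothetical hazard 40 m ahead at the moment the driver must take over.
    print(driver_can_respond(40, 65, 1.5))  # False
```

At 65 mph, the car covers about 43.6 m in 1.5 s and about 72.6 m in 2.5 s before the driver even begins to respond. If the ADAS fails or hands back control with less margin than that, attentive monitoring alone cannot prevent the crash.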

On March 23, 2018, Huang's Model X, running Navigate on Autopilot, crashed into a traffic barrier on a California highway, and he died at the hospital. The National Transportation Safety Board (NTSB) investigated the crash and concluded that a combination of driver distraction and Autopilot's limitations caused it. The NTSB also determined that Tesla's "ineffective monitoring of driver engagement" contributed to the crash. Coincidence or not, the National Highway Traffic Safety Administration (NHTSA) recently issued a special order asking Tesla about "Elon Mode," which allows the ADAS to operate without driver monitoring. The mere existence of such a mode shows how little attention Tesla paid to the NTSB report. The verdict in Huang's case will also be a stepping stone for future lawsuits.

Tesla is not the only party that deserves scrutiny, though. These collisions have been happening for years, which shows that regulators and legislators are either incapable of preventing them or unwilling to do so. Whichever hypothesis is more damning, the bottom line is that Tesla has been doing whatever it wants with Autopilot and FSD. As long as only affected customers try to rein the company in, any prospect of major change will depend on how many lawsuits they are willing to file.