Costas Lakafossis contacted me about a week after the Wall Street Journal broke some explosive news. On August 9, the newspaper published a video of a 2019 Tesla Model X on Autopilot crashing into an emergency vehicle. The engineer and accident investigator told me the footage was "very useful for explaining the issue of Autopilot crashing into the back of emergency vehicles." I asked him to do just that, and his explanation will probably help more people understand why the company does not fix the problem for good. The short answer is Tesla Vision.

Lakafossis is the man who wrote a petition to the National Highway Traffic Safety Administration (NHTSA) about sudden unintended acceleration (SUA) episodes involving Tesla vehicles. In April, the engineer explained in a detailed letter to the American regulator that Autopilot was involved. He was also kind enough to explain everything to me, which you can read whenever you want. In May, Tesla dismissed an investigation in China by implementing the very changes the accident investigator proposed. NHTSA has yet to provide Lakafossis with an answer.

Regarding these emergency vehicle crashes, it is clear that the battery electric vehicle (BEV) maker does not fix the problem because of its insistence on Tesla Vision. That stubbornness creates a paradox: the company cannot solve the problem because it does not want to. Although several autonomous vehicle (AV) specialists have already warned that this is a mistaken approach, the company removed radars and ignored LiDARs – except for validation purposes. Tesla prototypes have been seen more than once with LiDARs.

The 2019 Model X in the WSJ footage has ultrasonic sensors. Tesla started removing them in 2021 – when the crash happened – and disabling them through over-the-air (OTA) updates. It is not clear if the sensors were working on the Model X. If they were, Tesla may already have been ignoring them for a while. Another possibility is that it just did not manage to integrate the information these sensors provided in a useful way. We'll probably never know. The point is that they did nothing to prevent the crash. Even if they had, new cars do not have these sensors. As Lakafossis stated, the video helps explain why Tesla Vision cannot deal with emergency vehicles, even if it was not entirely to blame in that specific case.

As the accident investigator clarifies, "you need a basic understanding of the technology behind Tesla Vision and machine-vision concepts in general" to understand "the issue of Tesla cars crashing into emergency vehicles."

"Very briefly: Tesla Vision does not use any type of radar, LiDAR, or other sensor that creates data in the 3D space in front of the car. The only input of the system is a single camera looking straight ahead that records images at a rate of 36 frames per second. When the system is turned on and it 'wakes up' in an unknown location, the only available information is the 2D image that the camera initially sees. It has absolutely no understanding of depth and 3D, so if you start up and select Forward instead of Reverse, of course it will allow you to crash into the wall of the parking garage in front (because it has not realized yet that the camera is actually showing a wall and not an empty parking lot)."

In other words, Tesla Vision initially relies on the driver's perception of the 3D scene and only builds its own a bit later.

"In order to create an understanding of the 3D space around it, it needs to start moving around. That's how the machine vision algorithm can start to compare each consecutive frame with the previous one so that it can create a map that assigns each voxel (the equivalent of a pixel in 3D space) with a value and ultimately decide if this voxel is 'occupied' or not."

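To make the idea of an occupancy map more concrete, here is a minimal Python sketch. It is not Tesla's code: the `VoxelMap` class, the evidence weights, and the threshold are all hypothetical, and a real system would have to derive each frame's observations from camera images instead of receiving them ready-made. The only point it illustrates is that, under these assumptions, a voxel is marked as occupied only after several consecutive frames agree.

```python
# Hypothetical tuning values, chosen only for illustration.
HIT_EVIDENCE = 0.9        # added when a frame suggests the voxel contains something
MISS_EVIDENCE = -0.4      # added when a frame suggests the voxel is empty
OCCUPIED_THRESHOLD = 2.0  # accumulated evidence needed to call a voxel occupied


class VoxelMap:
    """Accumulates per-voxel evidence over consecutive frames."""

    def __init__(self):
        # Key: integer (x, y, z) voxel index. Value: accumulated evidence score.
        self.evidence = {}

    def update(self, hits, misses):
        """Fold one frame's observations into the map."""
        for voxel in hits:
            self.evidence[voxel] = self.evidence.get(voxel, 0.0) + HIT_EVIDENCE
        for voxel in misses:
            self.evidence[voxel] = self.evidence.get(voxel, 0.0) + MISS_EVIDENCE

    def is_occupied(self, voxel):
        """Voxels that were never observed are treated as not occupied."""
        return self.evidence.get(voxel, 0.0) >= OCCUPIED_THRESHOLD


if __name__ == "__main__":
    voxel_map = VoxelMap()
    wall = (0, 0, 5)  # hypothetical voxel straight ahead of the car

    voxel_map.update(hits=[wall], misses=[])
    print(voxel_map.is_occupied(wall))  # False: one frame is not enough evidence

    voxel_map.update(hits=[wall], misses=[])
    voxel_map.update(hits=[wall], misses=[])
    print(voxel_map.is_occupied(wall))  # True: three frames agree
```
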
Lakafossis has a very positive impression of that tech but also some reservations.

"This is a truly fantastic technology that already is extremely useful in various robotic applications, but we need to understand its limits before trusting it with our lives in a car that has no other way of cross-checking its perception of the 3D space in front of it."

This is the core issue with Tesla Vision and Autopilot, which Lakafossis kindly explained with a series of images he prepared for autoevolution.

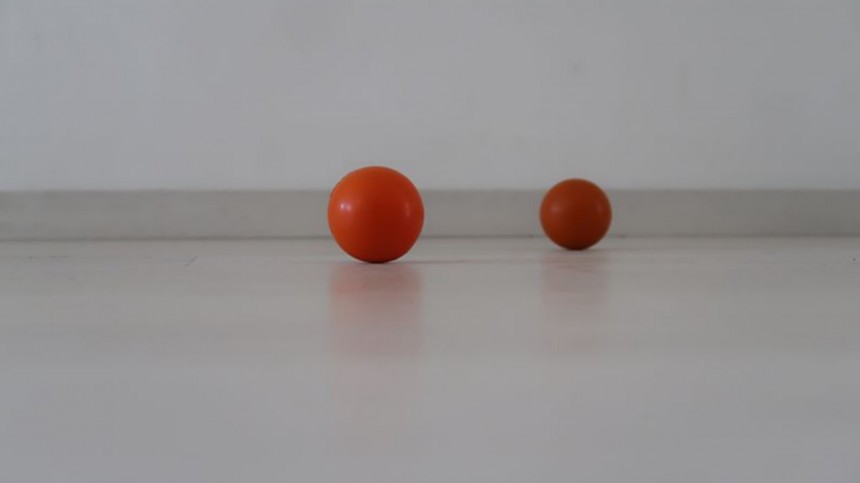

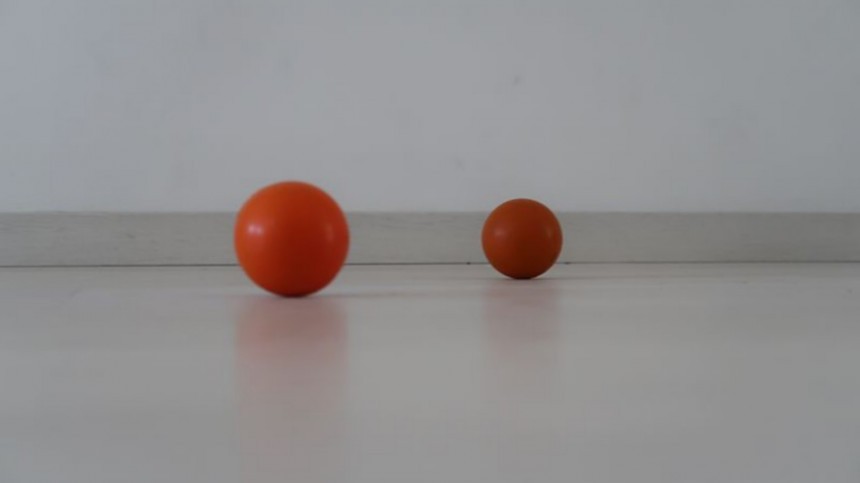

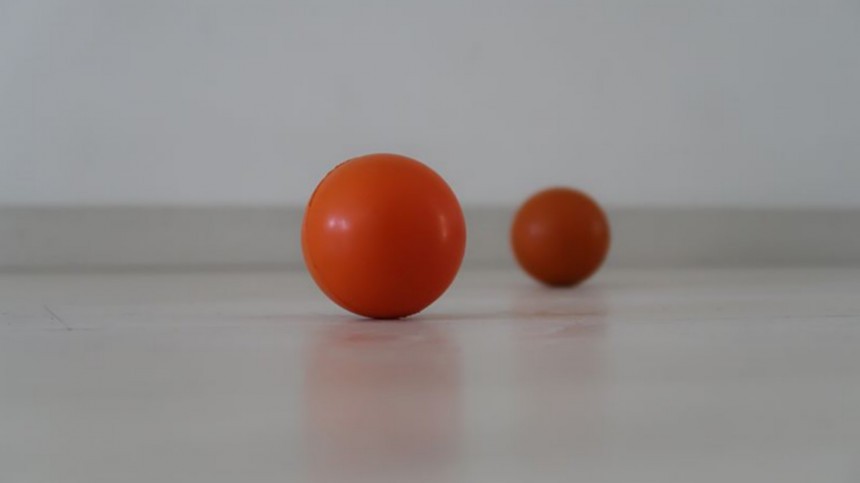

"I am sending you an example of a very simple situation: two orange balls of unknown size, distance, and speed. From the first image, we cannot tell if they are actual 3D objects or if they are simply painted on a white billboard. We need to start moving forward in order to observe Parallax and understand that they are actually objects in 3D space. To create a 3D map in front of us, we need enough images and enough movement, depending on the complexity of the situation (a simple empty parking lot with one or two cars is much faster to interpret than a busy intersection with traffic lights during the night)."

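The parallax argument can be put into numbers with a short Python sketch. It assumes an idealized pinhole camera, a static object, and a car that knows exactly how far it moved straight ahead between two frames. The function names and the focal length are hypothetical and have nothing to do with Tesla's software. Under those assumptions, a point seen at horizontal image position x before the move and x' after moving d meters lies at depth Z = d · x' / (x' − x) at the time of the first frame. In the example, the two balls sit equally far from the image center in the first frame, so that frame alone cannot tell which one is closer.

```python
def project(lateral_offset_m, depth_m, focal_px=1000.0):
    """Pinhole projection: horizontal position in pixels from the image center."""
    return focal_px * lateral_offset_m / depth_m


def depth_from_forward_motion(x_before_px, x_after_px, moved_m):
    """Depth of a static point at the first frame, from two frames moved_m apart.

    Returns None when the point did not shift between frames,
    because without parallax there is no depth information.
    """
    shift = x_after_px - x_before_px
    if shift == 0:
        return None
    return moved_m * x_after_px / shift


if __name__ == "__main__":
    moved = 10.0  # meters driven between the two frames (assumed known)

    # Two balls as (lateral offset, depth) in meters; values are made up.
    far_ball = (2.0, 40.0)
    near_ball = (-1.0, 20.0)

    for offset, depth in (far_ball, near_ball):
        before = project(offset, depth)
        after = project(offset, depth - moved)
        estimate = depth_from_forward_motion(before, after, moved)
        print(f"first frame at {before:+.0f} px, estimated depth {estimate:.1f} m")
```
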
You may see what Lakafossis means in our gallery. He could have sent us a video, but the pictures show how a computer processes images: one by one. If it were a video, it would be frame by frame.

"The AI algorithm is getting better every day using data from actual cars driving around, but it is very important to understand its limits: no matter how good its training is, it can only try to understand what is visible and recognizable in 2D vision. Its only input is a matrix of RGB values against an x,y position of a photo that gets updated at a rate of 36 fps, so we trust our lives in its capability of immediately interpreting these x,y values into an x,y,z value of 'occupied' or 'free.' Maybe this is too much to ask if 99% is not enough, and we really need 100% to be safe."

Apply this concept to the situations in which emergency vehicles got hit by Tesla cars on Autopilot, and you'll realize how inevitable they were given the way the BEV maker developed its tech. Lakafossis elaborated on that.

"If I wanted to fool the machine-vision algorithm, the easiest way to do this would be to choose a dark place and then flash intermittent, very bright lights straight into the camera. The machine-vision system can only interpret what it can see, so if I shine a powerful flashlight into the camera to blind it on purpose, the system will never know if it is a person holding a flashlight, a motorcycle approaching, or a heavy truck stopped in the middle of the street. This is exactly what happens with the bright strobe lights of emergency vehicles."

The accident investigator demonstrated that with screenshots of the video WSJ published.

"It is clear that the change from one frame to the next is too much for the machine-vision software to compare and evaluate (new lights come on and off in different parts of the frame, different flashes of light glow and paint large parts of the 2D frame in different colors that were not there in the previous frame, etc.). Remember that the algorithm compares each frame with the previous one in order to evaluate the change in the 3D scenery (by using 2D values and the rate of change for each value). The flashing colored lights create so much change from frame to frame that it is impossible to compare anything."

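A toy Python sketch shows the kind of frame-to-frame comparison Lakafossis describes breaking down. It is only an illustration under loud assumptions: frames are tiny grids of brightness values from 0 to 255, and the function names, the per-pixel threshold, and the 50% limit are hypothetical. It does not reproduce how Tesla Vision actually compares frames. It simply measures how much of the image changed between two frames and refuses to compare them when too much did.

```python
def changed_fraction(prev_frame, next_frame, threshold=40):
    """Share of pixels whose brightness changed by more than threshold."""
    total = 0
    changed = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for prev_px, next_px in zip(prev_row, next_row):
            total += 1
            if abs(next_px - prev_px) > threshold:
                changed += 1
    return changed / total if total else 0.0


def comparable(prev_frame, next_frame, max_changed=0.5):
    """True when little enough changed for the two frames to be matched."""
    return changed_fraction(prev_frame, next_frame) <= max_changed


if __name__ == "__main__":
    # 4x4 night scene: uniformly dark.
    dark = [[10] * 4 for _ in range(4)]
    # Next frame without strobes: only slight brightness drift.
    drift = [[12] * 4 for _ in range(4)]
    # Next frame with a strobe flash washing out three of the four rows.
    strobe = [[250] * 4 for _ in range(3)] + [[10] * 4]

    print(changed_fraction(dark, drift), comparable(dark, drift))    # 0.0 True
    print(changed_fraction(dark, strobe), comparable(dark, strobe))  # 0.75 False
```
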
Summing that up, Lakafossis says that Tesla Vision is blind to flashing lights. Some redundancy – such as LiDAR or other sensors that help the computer build a 3D scene – could reduce or prevent that danger, but Tesla has already made it clear that it will never back down. After all, Elon Musk thinks humans work with two cameras (the eyes), so his cars have to do the same. The problem is that he insists they can do that right now, going against the opinion of his own engineers: they wanted to keep ultrasonic sensors. Lakafossis argues that it would be easier if Tesla just admitted how incapable the system is of dealing with emergency vehicles.

"While the legislators are still investigating the issue, people continue to get killed or seriously injured by this exact type of accident that has happened 16 or 17 times already. I think that someone needs to say to Tesla drivers very clearly: 'If you see any kind of flashing lights ahead, GRAB THE WHEEL IMMEDIATELY and take avoiding action because Autopilot is not capable of recognizing an emergency vehicle with flashing strobe lights.' This is not just a theory; this is something rather obvious to anyone who has any understanding of machine-vision concepts, and, anyway, it is illustrated beyond any doubt in the WSJ footage."

The engineer knows that Tesla has legally protected itself with its disclaimers and with its customers' strange willingness to test its vehicles for free. Still, the BEV maker's liabilities are far from the only concern in these crashes: protecting lives is the core matter here.

"I understand that the drivers should have read the fine print and should have been more careful, but accidents have happened, and people have been killed. So, obviously, we need to do more in order to prevent the next accident. And let's not forget that this is a very dangerous situation for police, paramedics, and all kinds of emergency responders who routinely risk their lives in their course of duty to help the rest of us in times of need. It is already very cruel to say, 'OK, the driver of the car made a mistake and paid for it,' but let's not forget that an emergency vehicle is not there by itself: there are other people who are involved as victims in these accidents."

Now that you also have a basic understanding of machine-vision concepts, you'll know what's behind new crashes involving emergency vehicles and Tesla cars on Autopilot. That may be particularly helpful if you own a Tesla and love the advanced driver assistance system (ADAS). If that's the case, following Lakafossis' advice will not hurt: it may prevent "an accident caused by a driver trusting the technology too much, despite the disclaimers in the fine print."
