The world is again abuzz about a Tesla recall, this time affecting over two million vehicles. Despite what the word "recall" implies, this is simply a software update to prevent drivers from abusing the Autosteer system. All vehicles equipped with a cabin camera are now receiving the fix with the 2023.44.30 update, with the rest of the Tesla fleet following at a later date.

As I wrote in an earlier article, Tesla agreed on December 12 to a voluntary software recall to address driver abuse of Autosteer. This Autopilot component keeps the vehicles centered in the lane. The announcement caused an uproar on Wall Street and in the media, with some outlets posting alarmist headlines like "Tesla recalls more than 2 million cars over steering issue" (Consumer Affairs) or "Elon Musk's Tesla recalls two million cars in the US over Autopilot defect" (BBC).

The truth is that Tesla's steering systems are perfectly fine, and so is Autopilot. This is not an issue that could put you in danger when you drive your car. The only thing this software recall changes is that it makes it harder for drivers to abuse Autopilot and, specifically, Autosteer. In other words, it forces a safety measure on drivers who don't care much about their own safety. It's the software equivalent of making sure a knife can only cut bread and nothing else.

Tesla fans have complained in the past about the term "recall" being used when the remedy was delivered through a software update. They are even more vocal now, since the NHTSA did not mention restricting any Autopilot features, and the words "Full Self-Driving" or "FSD" do not appear once in the communications sent to the public. This time, Tesla issued a software update that makes it harder for drivers to abuse Autosteer, that is, to put their lives at risk by deliberately circumventing the baked-in safety checks.

As Tesla warns users, Autopilot and even the FSD capability are Level 2 driver-assistance systems, which means the driver is in control of the car and responsible for everything that happens while driving. Some drivers ignore this and exploit loopholes in the system to keep the car operating even when they are not paying attention. Tesla agreed to update its vehicles to make that more difficult (or even impossible) in the future.

Software update 2023.44.30 addresses this for Tesla EVs fitted with a cabin camera. Older vehicles without a cabin camera will receive another over-the-air update at a later time. This doesn't mean they are unsafe to drive, only that the system can be more easily tricked into believing the driver is paying attention when they are not.

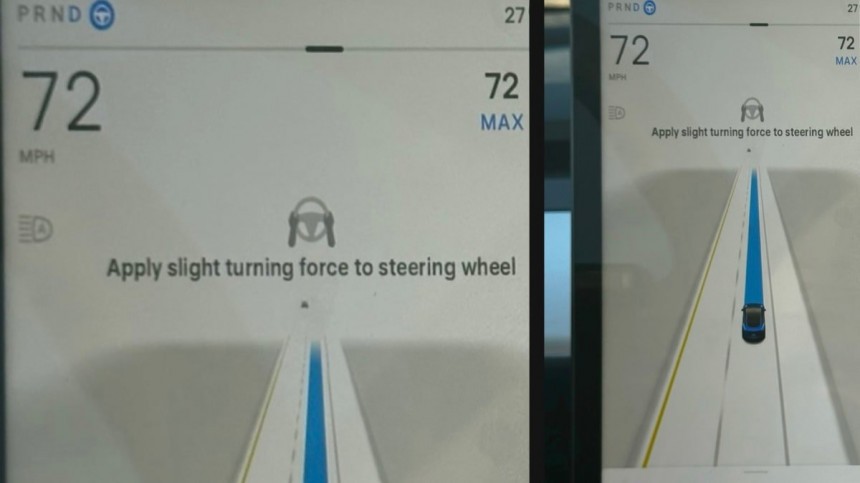

The new update, which had already been pushed to vehicles at the time of writing, introduces enhanced controls and alerts for Autosteer engagement to ensure the driver remains alert and responsible at all times. Visual alerts are also more prominent: they now appear at the top of the screen instead of the bottom to improve awareness.

Tesla also introduced new checks during Autosteer engagement, so you may encounter occasions when Autosteer is unavailable or refuses to engage. "If the driver attempts to engage Autosteer when conditions are not met for engagement, the feature will alert the driver it is unavailable through visual and audible alerts, and Autosteer will not engage," reads Tesla's statement. The car may even restrict speed and warn the driver to intervene immediately if it detects that Autosteer functionality may be limited "due to environmental or other circumstances."

In extreme cases, drivers who "repeatedly fail to demonstrate continuous and sustained driving responsibility while the feature is engaged" will be locked out of Autosteer entirely. Tesla already applies a similar "punishment" to those who abuse the FSD Beta software: access is suspended after five strikes and reinstated after two weeks.
