In all honesty, the current batch of self-driving cars is nothing but a bunch of hi-tech moles. They’re as blind as bats, to keep the zoological references flowing, and rely instead on all kinds of sensors that bounce signals off surrounding objects to get an idea of what’s around them. Much like a dolphin.

But that may soon change, as very high computing power is allowing researchers and scientists (and independent boy-geniuses) to come up with a new way of solving autonomous cars’ awareness problems using just a few cheap video cameras. Just like the ones in your expensive smartphone.

This new technique is basically teaching cars how to see just like we do. You weren’t born knowing that the long strip of asphalt is called a "road," were you? Nope, you too had to be told. The only difference is that, in the vehicle’s case, the process is a bit more complicated: the car needs many gigabytes of images before it can make correct assumptions about what it’s dealing with.

This system isn’t just a lot cheaper; it also doesn’t need a GPS connection, and it works perfectly fine whether it’s bright daylight or a starry night. The developers don’t say anything about it, but just as a human driver has to slow down in dense fog, heavy rain or snow, an autopilot using this system would probably do the same, if not stop altogether.

The system, called SegNet, is taught to sort what it sees into 12 different categories, such as roads, buildings, poles, street signs, pedestrians or cyclists. It works in real time, and it can evaluate any new image instantly with an accuracy of at least 90 percent. That means that at least 90 percent of the image’s pixels will be correctly assigned to one of the 12 categories. Don’t believe it? Give it a try here.
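To make that 90 percent figure concrete, here is a minimal Python sketch of pixel accuracy: the share of pixels whose predicted category matches the hand-made label. The function name, the tiny 2x3 "image" and the predictions in it are made up for illustration; this is not SegNet’s own code.

```python
def pixel_accuracy(predicted, labeled):
    """Return the fraction of pixels whose predicted category matches the label.

    Both arguments are lists of rows, each row a list of category names.
    Hypothetical helper for illustration only, not part of SegNet.
    """
    total = 0
    correct = 0
    for pred_row, label_row in zip(predicted, labeled):
        for pred, label in zip(pred_row, label_row):
            total += 1
            if pred == label:
                correct += 1
    if total == 0:
        raise ValueError("images contain no pixels")
    return correct / total


# Toy 2x3 "image": hand-made labels versus a made-up prediction.
labeled = [
    ["road", "road", "pedestrian"],
    ["road", "building", "building"],
]
predicted = [
    ["road", "road", "cyclist"],  # one pixel put in the wrong category
    ["road", "building", "building"],
]

accuracy = pixel_accuracy(predicted, labeled)
print(f"{accuracy:.0%} of pixels correct")
```

In this toy case five of the six pixels match, so the score falls short of the 90 percent mark. A real image has hundreds of thousands of pixels, but the counting works the same way.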

This was achieved by having a group of Cambridge undergraduate students manually label 5,000 images, with each one taking about 30 minutes. That’s 2,500 man-hours of tedious pixel-level work, but work that might eventually pay off. The team started off with urban and highway environments, but it will soon expand to cover rural scenes as well.
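The arithmetic behind the man-hours figure is easy to check. The short Python sketch below uses the two numbers quoted above; the eight-hour working day is purely an assumption added here to put the total in perspective.

```python
IMAGES = 5_000           # number of labeled images, from the article
MINUTES_PER_IMAGE = 30   # labeling time per image, from the article

# Total effort in hours: 5,000 images at half an hour each.
total_hours = IMAGES * MINUTES_PER_IMAGE / 60

# Assumption for illustration only: one person working 8 hours a day.
HOURS_PER_DAY = 8
working_days = total_hours / HOURS_PER_DAY

print(f"{total_hours:.0f} man-hours, or about {working_days:.1f} eight-hour days")
```

Spread over a group of students the job shrinks quickly, but for one person it would be well over a year of working days.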

SegNet’s creators, Professor Roberto Cipolla and Ph.D. student Alex Kendall, think it will be some time before driverless cars can take full advantage of this system and use it to navigate. However, it is all but ready to be implemented as a warning system.

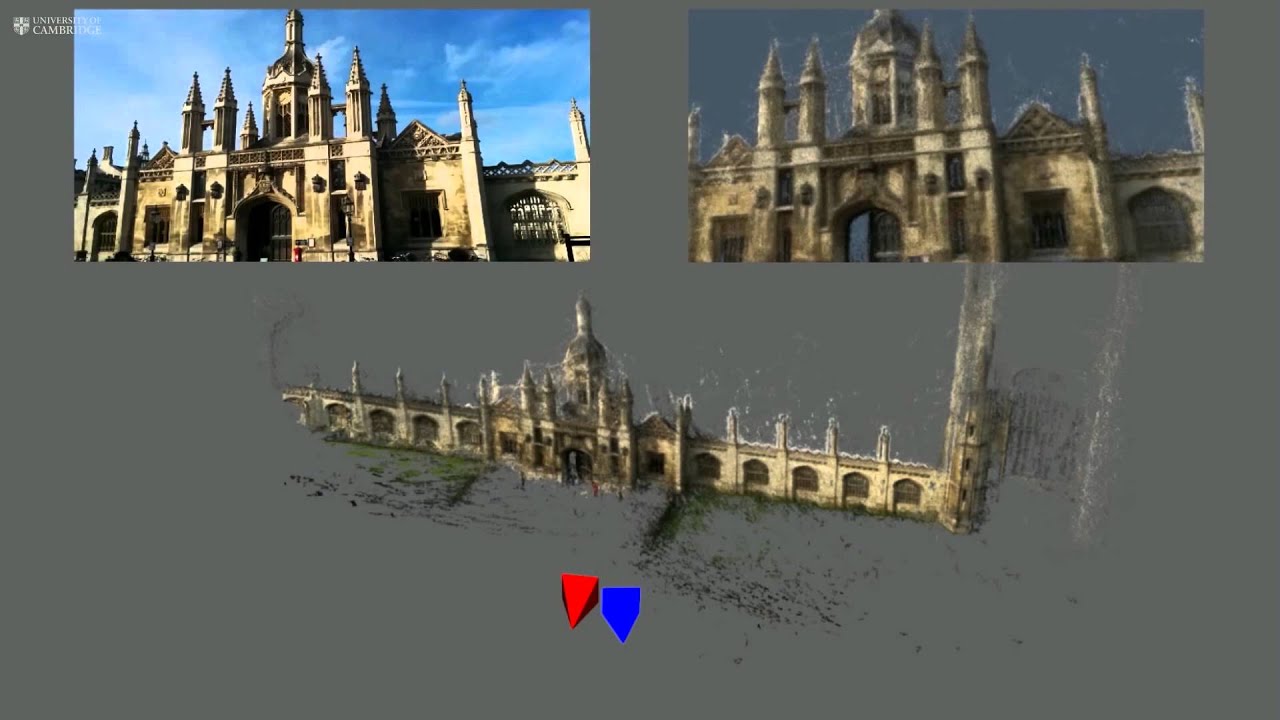

The team of two from the University of Cambridge also came up with another use for their development: precise localization. Their system is not only more accurate than GPS, but it also works where the satellite system cannot, such as indoors or in tunnels. It provides info on location and orientation with a deviation of only a few meters and a few degrees. Of course, the area has to be mapped in advance, but with all the roads accounted for by Google, that’s not really a problem. You can also give it a try here.
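What "a few meters and a few degrees" means can be shown with a small Python sketch that compares an estimated pose with the true one. Treating the position as a flat (x, y) point in meters and the orientation as a single compass heading is a simplification assumed here; the function names and sample numbers are hypothetical and not taken from the Cambridge system.

```python
import math


def position_error(estimate, truth):
    """Straight-line distance in meters between two (x, y) points."""
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])


def heading_error(estimate_deg, truth_deg):
    """Smallest absolute difference in degrees between two headings.

    The wrap-around makes 359 degrees and 2 degrees count as 3 degrees
    apart rather than 357.
    """
    diff = (estimate_deg - truth_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


# Made-up example: the estimate is off by 3 m east and 4 m north,
# and the heading wraps past north.
true_position, true_heading = (0.0, 0.0), 2.0
estimated_position, estimated_heading = (3.0, 4.0), 359.0

meters_off = position_error(estimated_position, true_position)
degrees_off = heading_error(estimated_heading, true_heading)
print(f"off by {meters_off:.1f} m and {degrees_off:.1f} degrees")
```

The wrap-around step matters because headings are circular: without it, an estimate pointing almost exactly the right way could look like it was nearly a full turn off.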

The best thing about autonomous cars isn’t that one day we’ll get to take a nap while our car drives us home by itself (OK, that sounds pretty awesome as well), but the impressive advancements in AI they’ve generated. If you think about it, a driverless car could very well be called a personal mobility robot, which instantly makes it a lot cooler. And scarier.

You can read more about what the people at the University of Cambridge are up to on their website.
