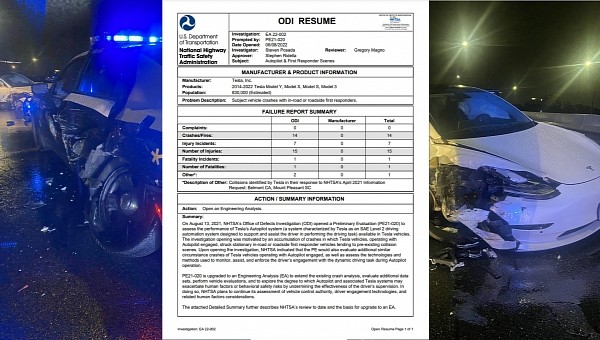

Anyone reading Part 573 Safety Recall Report 23V-085, or the letter Tesla sent to the National Highway Traffic Safety Administration (NHTSA), would conclude that the EV maker decided on its own to issue the recall. After talking to the safety agency, autoevolution found out it is a bit more complicated than that: the recall is the first effect of EA22-002.

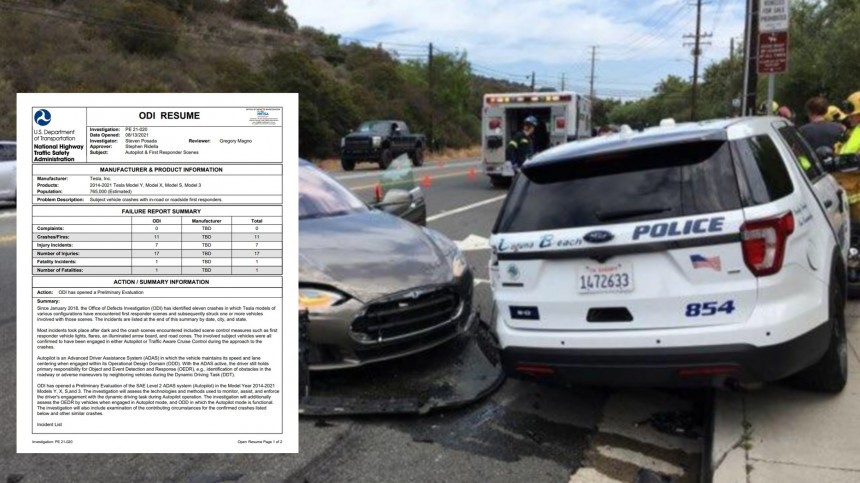

This code refers to the engineering analysis NHTSA opened in June 2022 as the next step after the preliminary evaluation the safety agency opened on August 13, 2021. PE21-020 was opened to check why Tesla vehicles using Autopilot were involved in 11 crashes with emergency vehicles. These collisions injured 17 people and killed one. Before PE21-020 turned into EA22-002, NHTSA had added five more crashes to the preliminary evaluation.

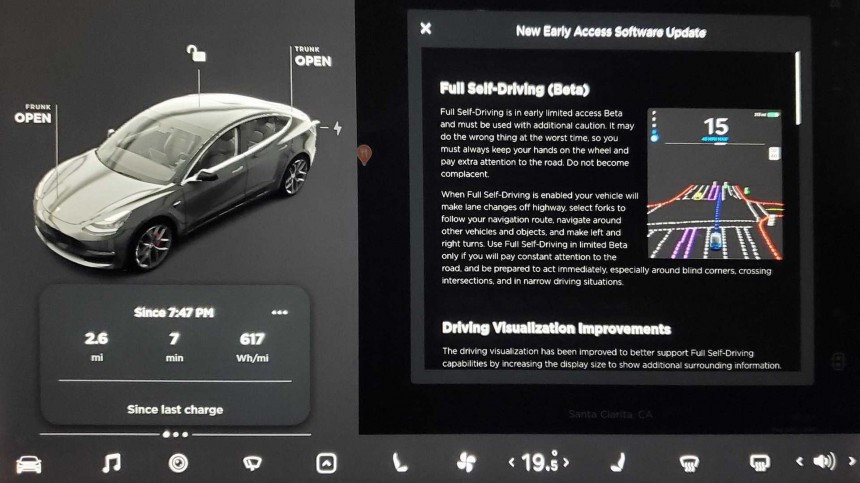

You may be asking: why did an engineering analysis on Autopilot end up with an FSD recall? It shows that NHTSA is evaluating all Tesla advanced driver assistance systems more thoroughly, and that these systems are more closely connected than the way the EV maker treats them would suggest. Tesla currently charges $15,000 for the right to test its beta software.

The safety agency told autoevolution that it detected that Autosteer on City Streets “led to an unreasonable risk to motor vehicle safety based on insufficient adherence to traffic safety laws.” While it seems Tesla discussed the matter with NHTSA and decided the recall was the right thing to do, the safety agency framed that in another way. According to NHTSA, Tesla did so “as required by law.”

Predictably, some tried to argue that an over-the-air (OTA) update is not a recall. They either fail to grasp or choose to ignore that FSD itself is a piece of software and that nothing apart from an update could fix it. Any repair that involves safety is a recall, regardless of how trivial or easy it may seem. More than that, FSD is beta software, and safety experts are astonished that NHTSA lets a company test it with the general public. If anything goes wrong with FSD, people may die.

This is what Philip Koopman shared on his LinkedIn page. According to the autonomous vehicle safety specialist, he is “shocked that NHTSA is letting known dangerous testing continue on public roads pending the update. They should stand down testing until the remedy is implemented (i.e., temporarily turn off FSD beta until updating). What is the possible excuse to permit an optional add-on feature to keep operating when subject to a serious recall like this?”

Koopman also gave more concrete examples of what this FSD flaw involves. Not by chance, it has to do with something people on the Autopilot team said under oath that they know nothing about: perception-reaction time. That’s the very thing that will allow a driver to recover control before they crash.

“Drivers might not have enough time to react to prevent dangerous driving such as running stale yellow lights, not having enough time to react to entering an intersection in a dangerous situation from a stop sign, conformance to variable-speed limits, and exiting a turn-only lane when intending to go straight.”

The associate professor at Carnegie Mellon University added that “NHTSA propagates the autonowashing of FSD by calling a public road test SAE Level 3/4 feature a Level 2 feature. They should (and do) know better. Have to wonder if that was a concession they made to Tesla as part of negotiating a voluntary recall. Disappointing to see this.”

Ironically, autonowashing means trying to make something look more autonomous than it really is. To authorities, Tesla does the opposite: it states its software is just an ADAS, even though Musk publicly bragged that FSD’s goal was to deliver 1 million robotaxis by 2020. The fact that it did not work is no excuse to treat it differently from other autonomous software testing occurring around the U.S. Companies like Waymo and Cruise have to apply for special authorization to run their tests on public roads. Tesla dodged that by stating FSD and Autopilot are only Level 2.

In Koopman’s opinion, at least, “this is a much more powerful statement by NHTSA than we've seen in the past that saying software is 'beta' does not shield a company from recalls.” The safety agency also said that it “will continue to monitor the recall remedies for effectiveness.”

Tesla investors hoping this recall will put an end to EA22-002 must hold their horses. According to the safety agency, the action “does not address the full scope of NHTSA’s EA22-002 investigation as articulated in the opening resume. Accordingly, NHTSA’s investigation into Tesla’s Autopilot and associated vehicle systems remains open and active.”

In other words, this recall is probably just the beginning: there’s more to come. NHTSA now has a better understanding of the system’s performance. However, Koopman’s question still stands: how does the safety agency allow a car company to test safety-critical beta software on public roads with regular customers? NHTSA may have started to answer.
