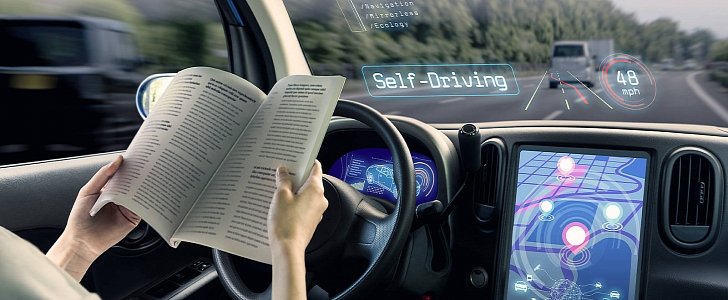

Although companies continue to promote self-driving cars on the strength of various projections, public trust in cars that drive themselves remains very low. One main reason is that people believe such cars could malfunction, be hacked, or even be tricked into doing things they would not normally do.

GARD is a program DARPA announced this week. The name stands for Guaranteeing AI Robustness against Deception, and its main goal is to prevent machine-learning (ML) systems from being manipulated in any malicious way.

To illustrate the need for GARD, DARPA cites a case in which a self-driving car was tricked by visual alterations into reading a stop sign as a 45 mph speed limit sign.

Such a manipulation can easily be carried out without any access to the ML system itself. Using fairly low-tech tools, an attacker could alter the appearance of street signs, for instance, so that the car misreads them and then acts on what it believes is the appropriate response.

The agency warns that if this were to happen in the real world, “the self-driving car would accelerate through the stop sign, potentially causing a disastrous outcome.”

DARPA calls this type of activity an adversarial deception attack and plans to counter it through the GARD program.

GARD is meant for machine-learning technologies in many fields, not only those built into cars. It will initially focus on image-based ML and then move on to video, audio, and even more complex systems.

“There is a critical need for ML defense as the technology is increasingly incorporated into some of our most critical infrastructures,” Hava Siegelmann, program manager in DARPA’s Information Innovation Office, said in a statement.

“The GARD program seeks to prevent the chaos that could ensue in the near future when attack methodologies, now in their infancy, have matured to a more destructive level. We must ensure ML is safe and incapable of being deceived.”
